Getting a Grip on Scope 3 Data Quality

This issue dives into how data quality is measured. The GHG Protocol is exploring ways to improve scope 3 data quality, including by requiring companies to disclose how specific their data is. But there are real questions about whether this is feasible.

Defining data quality

Making scope 3 data more actionable is a key focus of the GHG Protocol’s revision of the Scope 3 Standard. Data quality is often too low for companies to move beyond reporting to action.

One major obstacle is a lack of specificity. According to SBTi, only 6% of companies use supplier-specific emission factors. Most firms therefore rely on average-data or spend-based methods, which makes it difficult to distinguish between suppliers and target action accordingly.

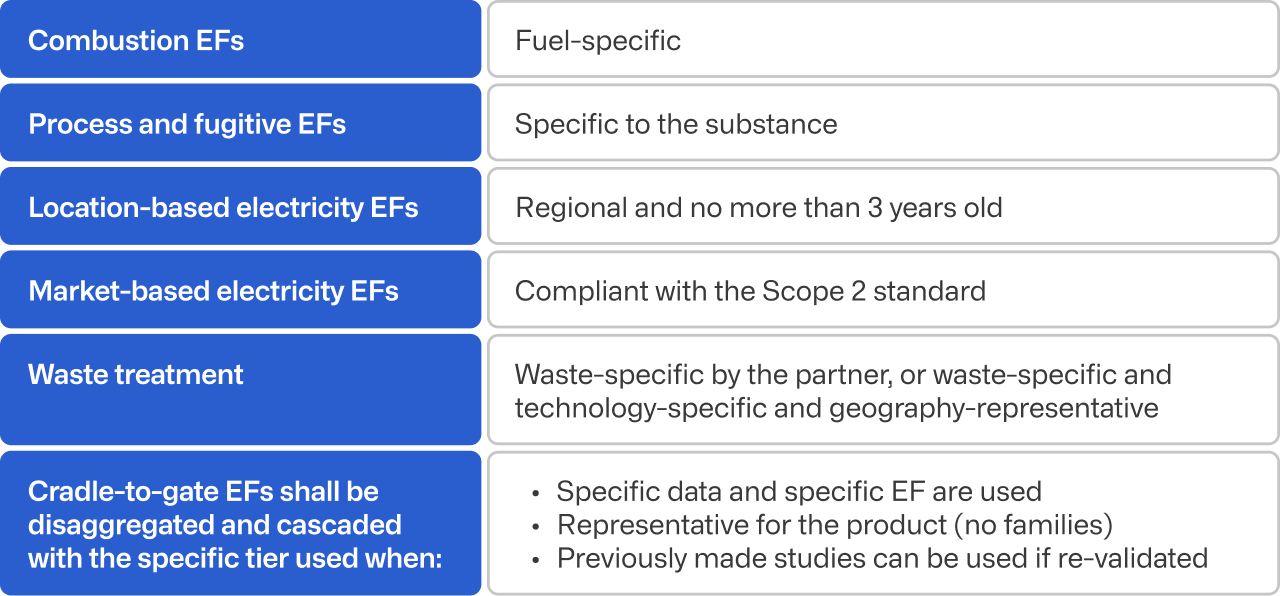
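To see why relying on spend-based methods makes suppliers hard to tell apart, consider a simplified example. The sketch compares a spend-based estimate (spend multiplied by a sector-average factor) with a supplier-specific one (quantity multiplied by each supplier's own factor). The suppliers, the factor values and the function names `spend_based` and `supplier_specific` are hypothetical, chosen only to illustrate the mechanics.

```python
# Hypothetical figures: two suppliers with identical spend and volume but
# different production emissions.
from dataclasses import dataclass

# Assumed sector-average spend-based factor, tCO2e per USD (illustrative only).
SECTOR_EF_PER_USD = 0.0005


@dataclass(frozen=True)
class Purchase:
    supplier: str
    spend_usd: float              # amount spent with the supplier
    quantity_tonnes: float        # physical quantity bought
    supplier_ef_per_tonne: float  # supplier-specific factor, tCO2e per tonne


def spend_based(p: Purchase) -> float:
    """Spend x sector average: equal spend always gives equal emissions."""
    return p.spend_usd * SECTOR_EF_PER_USD


def supplier_specific(p: Purchase) -> float:
    """Quantity x the supplier's own factor: reflects real differences."""
    return p.quantity_tonnes * p.supplier_ef_per_tonne


purchases = [
    Purchase("Supplier A", 1_000_000, 1_000, 0.3),
    Purchase("Supplier B", 1_000_000, 1_000, 0.7),
]

for p in purchases:
    print(f"{p.supplier}: spend-based {spend_based(p):.0f} tCO2e, "
          f"supplier-specific {supplier_specific(p):.0f} tCO2e")
```

With these made-up numbers, the spend-based method assigns 500 tCO2e to each supplier, while supplier-specific data shows 300 and 700 tCO2e. Only the latter gives the buyer a reason to favour one supplier over the other.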

But data quality isn’t only about specificity. As the GHG Protocol explains (see Figure 2), data can be specific yet inaccurate. For example, metered fuel data recorded with an incorrectly calibrated device is highly specific but inaccurate.

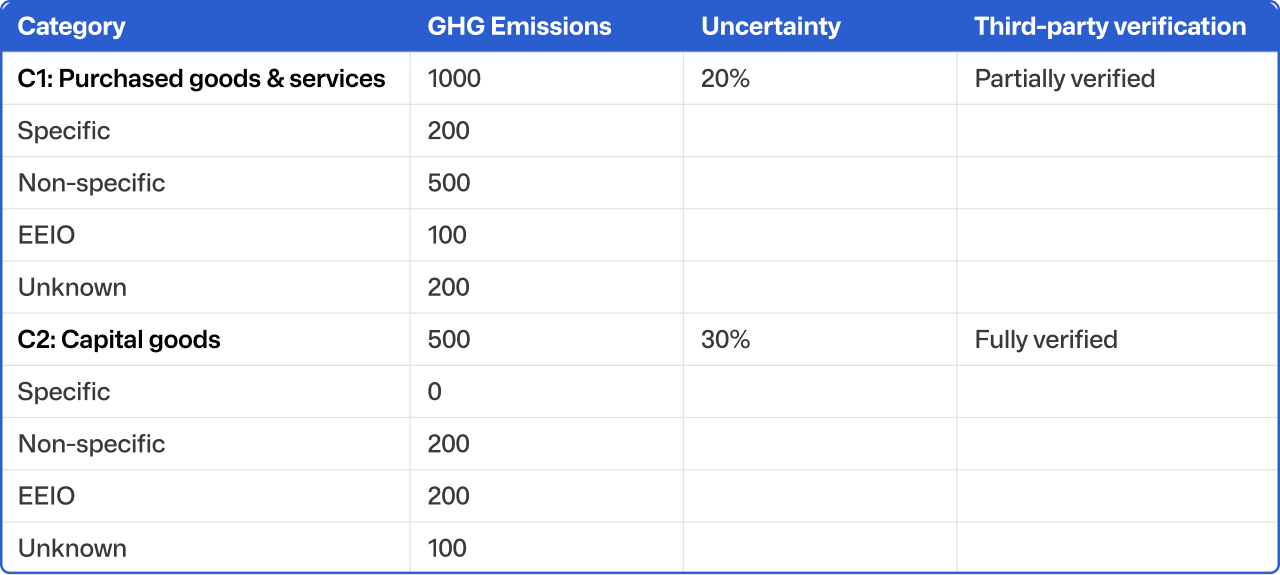

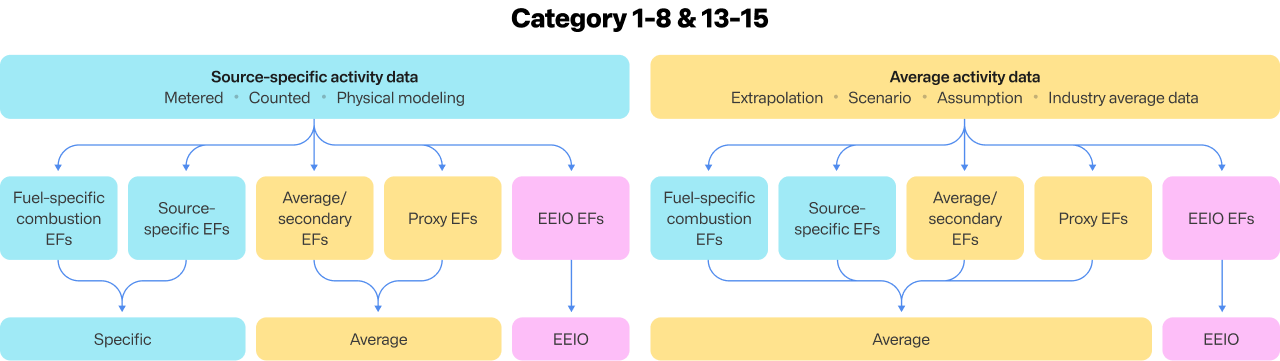

In fact, data quality encompasses several aspects: the extent to which data is complete, reliable, and representative (geographically, temporally, and technologically). Overall data quality is shaped by a range of factors, including the data source, the methods used to calculate emissions, the degree of uncertainty, and specificity.
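One way to make these dimensions concrete is to score each emissions line item on every dimension and roll the scores up across the footprint. In this minimal sketch, the 1-to-5 scale, the emissions-weighted average, the example line items and the function name `weighted_scores` are all assumptions for illustration; it is not a method prescribed by the GHG Protocol.

```python
from __future__ import annotations

from dataclasses import dataclass

# Dimensions taken from the text; the 1 (best) to 5 (worst) scale is assumed.
DIMENSIONS = (
    "completeness",
    "reliability",
    "geographical",
    "temporal",
    "technological",
    "specificity",
)


@dataclass
class LineItem:
    name: str
    emissions_tco2e: float
    scores: dict[str, int]  # dimension -> score, 1 (best) to 5 (worst)

    def __post_init__(self) -> None:
        # Reject line items that skip a dimension or use an out-of-range score.
        missing = set(DIMENSIONS) - set(self.scores)
        if missing:
            raise ValueError(f"{self.name}: no score for {sorted(missing)}")
        if any(not 1 <= s <= 5 for s in self.scores.values()):
            raise ValueError(f"{self.name}: scores must be between 1 and 5")


def weighted_scores(items: list[LineItem]) -> dict[str, float]:
    """Emissions-weighted average score per dimension, kept separate rather than merged."""
    total = sum(i.emissions_tco2e for i in items)
    return {
        dim: sum(i.emissions_tco2e * i.scores[dim] for i in items) / total
        for dim in DIMENSIONS
    }


items = [
    # Specific (metered) but unreliable: the meter is miscalibrated.
    LineItem("Metered fuel", 400.0, {
        "completeness": 1, "reliability": 4, "geographical": 1,
        "temporal": 1, "technological": 1, "specificity": 1}),
    # Generic spend-based estimate: low specificity and representativeness.
    LineItem("Purchased goods (spend-based)", 600.0, {
        "completeness": 2, "reliability": 3, "geographical": 4,
        "temporal": 3, "technological": 4, "specificity": 5}),
]

for dim, score in weighted_scores(items).items():
    print(f"{dim:>13}: {score:.1f}")
```

Keeping one score per dimension, rather than collapsing them into a single number, preserves the distinction between specificity and accuracy: the metered fuel line scores best on specificity but poorly on reliability. The choice of scale and weighting is itself a judgement call, which hints at why a standardised approach is hard to design.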

Which dimension matters most often depends on the use case: measuring the overall size of emissions, reporting on achieved reductions, or driving action.

Communicating data quality

As we discussed in our April newsletter, a key challenge with scope 3 is communication. Companies are both preparers and users of emissions data. One company’s emissions are another’s scope 3 emissions, which in turn form part of someone else’s scope 3 emissions, and so on.

For emissions data to flow across the value chain, companies need to:

- Collect raw data, which involves sourcing and integrating company-specific and third-party data (whether reported emissions, primary or secondary activity data, or emission factors)

- Transform this raw data into an organisational or product emissions footprint using appropriate methodologies

- Extract insights to drive decarbonisation, and

- Disclose their data as an input for others to do the same thing.
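The four steps can be sketched as a small pipeline in which each company's disclosure becomes raw data for its customers. The disclosure format, the "supplier-specific share" metric and every name and figure here are hypothetical, offered to illustrate how specificity information might travel with emissions data rather than to describe any proposed GHG Protocol requirement.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Disclosure:
    """What a company publishes for its customers (hypothetical format)."""
    company: str
    intensity: float                # tCO2e per unit of product sold
    supplier_specific_share: float  # fraction of its scope 3 from supplier-specific data


@dataclass(frozen=True)
class PurchasedInput:
    """Step 1 (collect): raw data for one purchased input."""
    name: str
    units: float
    average_intensity: float               # secondary factor, tCO2e per unit
    supplier: Optional[Disclosure] = None  # supplier's own disclosure, if available


def input_emissions(item: PurchasedInput) -> tuple[float, bool]:
    """Emissions for one input, and whether supplier-specific data was used."""
    if item.supplier is not None:
        return item.units * item.supplier.intensity, True
    return item.units * item.average_intensity, False


def scope3_footprint(inputs: list[PurchasedInput]) -> tuple[float, float]:
    """Step 2 (transform): total scope 3 and the part from supplier-specific data."""
    total = specific = 0.0
    for item in inputs:
        emissions, is_specific = input_emissions(item)
        total += emissions
        if is_specific:
            specific += emissions
    return total, specific


def largest_source(inputs: list[PurchasedInput]) -> str:
    """Step 3 (extract insights): the input contributing the most emissions."""
    return max(inputs, key=lambda item: input_emissions(item)[0]).name


def disclose(company: str, scope12: float, inputs: list[PurchasedInput],
             units_sold: float) -> Disclosure:
    """Step 4 (disclose): publish an intensity and a specificity share."""
    scope3, specific = scope3_footprint(inputs)
    share = specific / scope3 if scope3 else 0.0
    return Disclosure(company, (scope12 + scope3) / units_sold, share)


# Usage: a supplier's disclosure becomes an input to its customer's footprint.
steel = Disclosure("SteelCo", intensity=1.5, supplier_specific_share=0.2)
inputs = [
    PurchasedInput("steel", units=100, average_intensity=2.0, supplier=steel),
    PurchasedInput("plastics", units=50, average_intensity=1.0),
]
widget = disclose("WidgetCo", scope12=50.0, inputs=inputs, units_sold=1000)
print(largest_source(inputs), widget)
```

In this sketch, WidgetCo reports that 75% of its scope 3 emissions come from supplier-specific data, even though SteelCo itself sourced only 20% of its own scope 3 that way. Whether and how such quality information should carry through each tier of the value chain is one of the design questions a disclosure approach would need to settle.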

The challenge for the GHG Protocol is to devise a way of communicating data quality that allows this information to flow across the value chain, so that it is:

- Easy to interpret for data users: so firms can readily judge whether the data used in their scope 3 footprint is of sufficient quality to measure progress and inform action

- Easy to implement for data preparers: so firms can assess and communicate the quality of their scope 3 footprint without undue effort

- Not subjective: so the assessment avoids excessive interpretation and judgement.

Given the multiple dimensions of data quality, meeting these criteria is no easy task.